XCentium Blogs

Technical

Google Analytics Project

I would like to share some notes from my most recent effort in our GA practice.

Use Case:

Our client has multiple web sites for which they were keeping basic page visit information. They wanted to add some session-level information to allow them to tie back to session-level information they were saving from their application. They also wanted to have some information about 2 pages with some complex user interaction so they could improve the user experience. Then they wanted to retrieve this information from Google Analytics and load back into their database so they could join to it for reporting purposes.

Classic or Universal Analytics?

Our client was already using Classic Analytics. Universal Analytics was in early Beta. We could not find anything definitive about the Beta end date, or level of stability. We didn’t want to expose the client to the risk of needing some rush engagement with us to change code, if Universal Analytics changed during or after beta. To avoid that headache for them, we decided to keep using Classic Analytics.

Universal Analytics came out of Beta on April 2, 2014, after we’d completed this part of the project. We’ll use that for any future projects. While there are significant differences, we could do anything in Universal Analytics as easily as we did in the Classic version. I’ll mention any differences below.

Session-level information:

Our client wanted to get data from GA at the session level to be able to tie it back to other session information they were already keeping in a database.

Given we were using Classic Analytics, our solution involved using Custom Variables at the Session level. We used 1 custom variable to store a concatenation of our 3 extra pieces of information because of the following considerations:

When you query GA for sessions, you specify Dimensions and Metrics. What you get back are the values of the metrics aggregated over the dimensions. This is analogous to dimensions and measures in Data Warehousing, or even Pivot Tables in Excel. In this case, our custom variables would be used like Dimensions. Unlike Excel, rows that have one more dimensions with no value are not reported. We could have inserted a value of “(not set)” into each dimension to get around this. But, since one of our data items was always set, we always had a value in one of the fields.

The other consideration was simplifying data retrieval. When you query GA using the API, you may only have 7 dimensions in a query. If you use 3 of them getting the session level information and you want more than 4 other dimensions, you have to make additional queries.

As an aside, in Universal Analytics, we would have used Custom Dimensions, which have replaced Custom Variables. You can have up to 20 custom dimensions and 20 custom measures. They can be used directly with the web reporting interface.

Track field-level information:

Our client wanted to keep information about form fields that were updated or that failed validation for two key pages. They wanted to use this information to identify any troubles users were having filling out the forms so they could improve the pages.

We used GA Events to implement this requirement. You create events with the following data: Category, Action, Labels, Values and a boolean variable that controls the use of events in bounce-rate calculation.

To leave our options open for other events, we used Category and Action to indicate this was a field update or a field validation. The Label field was used for the field name. If the client had wanted an exact order of field events, we would have also added a time stamp in the label.

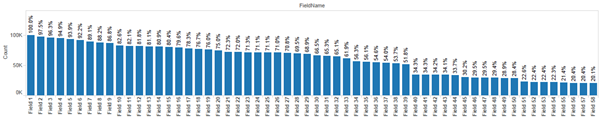

Without revealing any client-confidential information, you can still see the results from this information were reasonably informative. In the chart below, the fields displayed in normal order of entry. People using this site generally dropped out fairly steadily, until they hit Field 40 where 37% of the remaining people dropped out.

Factoring in GA Data Collection Limits:

With field tracking, we were setting up a solution that hit GA quite a bit. There are limits to the number of “hits” that can be made in various time periods. We needed to make sure we didn’t exceed any of these limits.

The limits that were relevant are listed below, based on the published collection limits for standard Google Analytics:

- Hits per session: 500

- Hit rate per property: 1 event per second, although there is some allowance for small bursts, occasionally.

- Hits per property per month: 10 M.

There are also limits to Reporting API Requests. These were much larger that the requests we expected to make. The same was true with the limits that trigger sampling.

We took a look at the statistics the client had at that point, number of sessions, process completion counts, etc. With that information, we discussed expected traffic changes with them. Then we made estimates of the various number of hits, which we then increased a bit, just to be sure. The sorts of statistics we used were:

- Max sessions per day

- Average page views per session,

- We wanted to track field-by-field on 2 pages. For each one we estimated:

- Probability of a session hitting that page.

- Number of fields we wanted to track.

- Number of expected validation errors.

We found we would be over by roughly 25% for the hit rate per property per month. We also had a concern about hit rate per second per property for events coming from validation failures. It seemed perfectly reasonable that we could exceed the 10 hits per second in a single session somewhat-regularly.

We resolved these concerns as follows:

- Since the client wanted to know only what fields were updated, we kept track of fields we had already reported and only reported once.

- To solve some of the hit-per-second problems involving validation failure, we concatenated all the field names failing validation into a comma-separated list. That list is what we stored in the event label. Because we were going to export our data from GA, we could split the concatenated list back into one field per event in the export process. We needed to keep in mind that the maximum label length was 2048 bytes.

- Further discussion with the client about how they would use the data led to only reporting validation errors for any field once per session, which further decreased the hit count.

- Even with the changes above, there was still no guarantee the site would not exceed the hit rates per property per second. We discussed this with our client who decided the data would still be usable if we missed some hits.

Extracting Data from GA:

Our requirements included retrieving data from GA because the client wanted to join the information with other data they were keeping in a SQL Server database. Having all the data in 1 place simplified reporting.

In addition, since we were concatenating fields failing validation into comma-separated lists, we could split out the lists into individual validation errors to simplify the query process.

GA has APIs to simplify data retrieval. We used these to retrieve data and place into delimited files. We used Microsoft SSIS to load into their SQL Server database. It would also have been possible to make a custom SSIS Data Source. There is a beta version of one on Codeplex now. Given the differences in coding complexity, and some learning curve for debugging, this solution might be best for programming organizations that are using SSIS for more than 1 project.

Code:

The coding was relatively straightforward. The GA examples are easy to understand and don’t have very many surprises.

We did have a few complications getting the session level information squared away.

We wrote a JQuery plugin that unobtrusively added a field change event handler to the fields we were tracking. We revised our validation plugin to execute another function in our GA plugin to record validation errors.

Testing our plugins was straightforward with the real-time data found in GA Web Reporting. We had a few issues with latency, where the custom fields were concerned. We figured this out using the GA API Explorer and a little luck.

We used the GA API Explorer to experiment with the queries we needed for our data, then wrote a console application we run from SSIS to make the comma-separated files of GA information. Because we had to have multiple queries to get all the data we wanted, we joined this information in SSIS when loading from the scrub tables. There were a few minor complications because of data availability for all the dimensions that we resolved by making sure we had all the session values, which were the key, in one query and then outer-joining the other data.

Conclusion:

Using the features of Google Analytics, we were able to help our client gain a complete understanding of site interaction. They used this insight, along with information they collected by session to improve their site for users. They reaped benefits as a result. The effort was not 100% straightforward because of the data collection limits of Google Analytics and the level of detail the client wanted. Still, we were able to do what was necessary because we could work with our client to get a good understanding of their needs and how best to serve them.