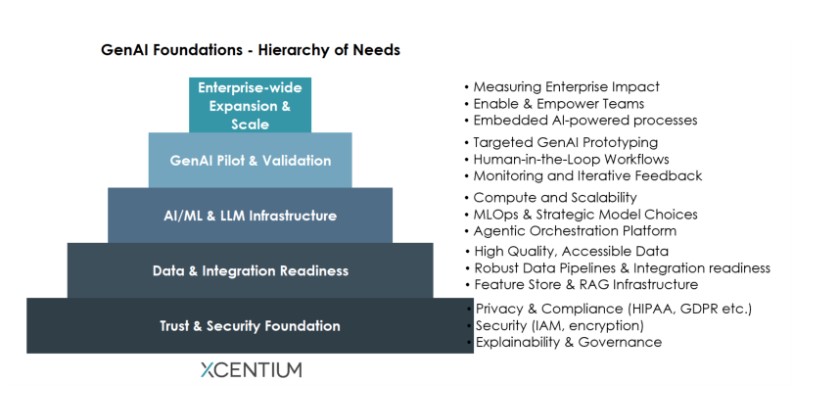

Generative AI (GenAI) promises unprecedented opportunities to transform business processes, enhance decision-making, and unlock new sources of value. Yet, GenAI initiatives often face high failure rates, with Gartner projecting over 40% of Agentic AI projects will be cancelled by the end of 2027[2]. Many organizations rush to deploy AI pilots without first establishing the solid foundations required for safe, scalable, and impactful adoption. Just as individuals must first satisfy foundational needs like safety and belonging before reaching self-actualization, as outlined in Maslow’s Hierarchy of Needs[1], enterprises must also progress through a structured hierarchy to unlock GenAI’s full potential. This journey starts with trust and security, advances through data readiness and a robust AI platform, moves into pilot validation, and culminates in enterprise-wide transformation. In this article, we explore a practical framework to help leaders chart a clear path to realizing GenAI’s true potential while ensuring long-term success and organizational alignment.

We cover each of the layers below, starting from the base layer.

Trust & Security Foundation

Strong GenAI adoption is built on trust. This layer ensures that privacy, security, and compliance are embedded throughout every subsequent layer of AI adoption.

Privacy & Compliance

Companies need to meet stringent regulatory standards such as GDPR, CCPA, and HIPAA. For example, a healthcare provider deploying GenAI for patient communications must ensure all generated content is HIPAA-compliant and does not inadvertently share sensitive information.

Security

Implementing robust identity and access management (IAM) frameworks — including techniques like single sign-on (SSO) and role-based access control (RBAC) — helps control who can access sensitive data and AI models. These controls should be complemented by strong network and data protection measures. These safeguards become especially important when integrating new AI systems into core enterprise environments, such as customer-facing applications, financial platforms, and HR systems.
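As a rough illustration of the RBAC idea above, the following Python sketch checks whether a user's roles grant a given permission. The role names, permission strings, and the `is_allowed` helper are hypothetical; a real deployment would typically delegate this to the organization's IAM system rather than an in-code mapping.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping, hard-coded only for illustration.
ROLE_PERMISSIONS = {
    "analyst": {"model:query"},
    "ml_engineer": {"model:query", "model:deploy"},
    "hr_admin": {"model:query", "data:hr_records"},
}


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)


def is_allowed(user: User, permission: str) -> bool:
    """Return True if any of the user's roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles
    )


alice = User("alice", {"analyst"})
bob = User("bob", {"ml_engineer"})

print(is_allowed(alice, "model:deploy"))  # False
print(is_allowed(bob, "model:deploy"))    # True
```

Unknown roles grant nothing in this sketch, so access is denied by default.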

Explainability and Governance

Clear governance guardrails, including model usage policies, output tracking, and IP rights management, prevent misuse and help manage legal risk. Explainability, specifically, focuses on the ability to understand how and why an AI model arrives at a particular decision or output. This transparency is vital for fostering trust among stakeholders, meeting regulatory requirements, and identifying potential biases. Tools that enable model interpretability help audit AI behavior and ensure the system aligns with organizational needs. Financial institutions, for example, often use model interpretability tools to explain credit or fraud decisions to regulators and customers[3].

Data & Integration Readiness

Reliable, well-governed data and seamless integration capabilities are critical enablers for GenAI success. Even the best models cannot deliver value without access to quality data.

High-Quality, Accessible Data

Centralized data in data lakes or lakehouses, supported by strong metadata catalogs and clear data ownership, enables unified access. A retail company, for instance, might unify purchase history, online browsing behavior, and loyalty data to drive personalized marketing campaigns powered by GenAI[4].

Robust Data Pipelines & Integration Readiness

Robust ETL/ELT pipelines ensure data is continuously cleaned, transformed, and made AI-ready, whether for real-time inference or batch workloads. High-quality, consistent data prevents the classic "garbage in, garbage out" trap.
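To make the cleaning step concrete, here is a minimal Python sketch of one transform stage. The record fields (`customer_id`, `email`) and the `clean_records` function are assumptions chosen for illustration; production pipelines would normally run inside a dedicated ETL/ELT tool.

```python
def clean_records(records):
    """Drop incomplete rows, normalize emails, and de-duplicate by customer_id."""
    seen = set()
    cleaned = []
    for row in records:
        customer_id = row.get("customer_id")
        email = (row.get("email") or "").strip().lower()
        if not customer_id or not email:
            continue  # skip rows missing a required field
        if customer_id in seen:
            continue  # keep only the first occurrence of each customer
        seen.add(customer_id)
        cleaned.append({"customer_id": customer_id, "email": email})
    return cleaned


raw = [
    {"customer_id": "c1", "email": " Ann@Example.com "},
    {"customer_id": "c1", "email": "ann@example.com"},   # duplicate
    {"customer_id": None, "email": "x@example.com"},     # missing id
    {"customer_id": "c2", "email": ""},                  # missing email
]

print(clean_records(raw))
# [{'customer_id': 'c1', 'email': 'ann@example.com'}]
```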

Integrating GenAI with core systems like ERP, CRM, and supply chain platforms unlocks end-to-end automation. For example, integrating AI into an ERP system could help automate demand forecasts and purchase order generation, reducing manual planning efforts[5].

Feature Store & RAG Infrastructure

Feature Stores ensure that the data features used to train an AI model are consistent with the features provided to the model during real-time inference. They are a critical part of the data pipeline that feeds reliable, high-quality data to the models.
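One way to picture the training/serving consistency described above is a single feature definition that both paths call. The sketch below is a simplified assumption of how that might look; the feature names and the `compute_features` function are hypothetical, and a real feature store adds storage, versioning, and point-in-time lookups on top.

```python
from datetime import date


def compute_features(customer: dict, as_of: date) -> dict:
    """Single feature definition shared by the training and serving paths."""
    return {
        "days_since_last_order": (as_of - customer["last_order"]).days,
        "avg_order_value": customer["total_spend"] / max(customer["order_count"], 1),
    }


customer = {"last_order": date(2024, 1, 1), "total_spend": 300.0, "order_count": 3}

# Both paths call the same function, so the features cannot drift apart.
training_row = compute_features(customer, as_of=date(2024, 1, 31))
serving_row = compute_features(customer, as_of=date(2024, 1, 31))

print(training_row)  # {'days_since_last_order': 30, 'avg_order_value': 100.0}
print(training_row == serving_row)  # True
```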

For LLMs, Retrieval-Augmented Generation (RAG) backed by vector databases is essential to ground responses in trusted enterprise knowledge. With RAG, outputs are more contextual, up-to-date, and traceable, which helps reduce hallucinations and keeps responses aligned with proprietary content. This is critical for regulated industries and C-suite confidence.
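The retrieval step of RAG can be sketched in a few lines of Python. This is a toy illustration only: the `embed` function below is a bag-of-words stand-in for a real embedding model, the in-memory list stands in for a vector database, and all function names are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list, k: int = 1) -> list:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, documents: list) -> str:
    """Assemble a grounded prompt from the retrieved context and the question."""
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are issued within 14 days of an approved return.",
    "Employees accrue vacation days monthly.",
]

print(build_prompt("How many days do refunds take?", docs))
```

The resulting prompt would then be sent to the LLM of choice. Because the retrieved passage travels with the question, the answer can be traced back to a specific source document.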

AI/ML & LLM Infrastructure

This layer provides the technical backbone to develop, deploy, and scale GenAI solutions, supporting both experimentation and enterprise-grade production workloads.

Compute and Scalability

Modern AI workloads require high-performance GPU clusters or specialized cloud services. Without this computational horsepower, tasks that take minutes or hours on optimized hardware might stretch into days or weeks on standard CPUs, or become practically impossible, severely hindering development cycles and the ability to deploy real-time AI solutions[6]. However, many medium-sized organizations, and those primarily leveraging external GenAI services (e.g., Gemini, OpenAI), can offload most of this computational burden to their service providers, reducing the need for significant internal GPU infrastructure.

MLOps & Strategic Model Choices

Central to effective MLOps and LLMOps is automating the entire model lifecycle: from development to deployment, monitoring, and continuous updates[7]. This includes robust model registries for version control and traceability, efficient serving infrastructure for inference, and CI/CD pipelines that reduce operational friction and enhance reliability. For instance, a large logistics company might use a model registry to track versions of route optimization models across different regions, so that each region deploys the intended version.
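The logistics example can be sketched as a tiny in-memory registry. This is an illustrative assumption of the core idea (versioned entries keyed by model and region), not the API of any particular MLOps product; the class names and artifact URIs are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    region: str
    artifact_uri: str


class ModelRegistry:
    """Minimal registry: append-only version history per (model, region)."""

    def __init__(self):
        self._versions = {}  # (name, region) -> list of ModelVersion

    def register(self, name: str, region: str, artifact_uri: str) -> ModelVersion:
        versions = self._versions.setdefault((name, region), [])
        entry = ModelVersion(name, len(versions) + 1, region, artifact_uri)
        versions.append(entry)
        return entry

    def latest(self, name: str, region: str) -> ModelVersion:
        return self._versions[(name, region)][-1]


registry = ModelRegistry()
registry.register("route-optimizer", "emea", "s3://example-bucket/route/emea/v1")
registry.register("route-optimizer", "emea", "s3://example-bucket/route/emea/v2")
registry.register("route-optimizer", "apac", "s3://example-bucket/route/apac/v1")

print(registry.latest("route-optimizer", "emea").version)  # 2
print(registry.latest("route-optimizer", "apac").version)  # 1
```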

For leaders, a key strategic decision at this stage is the choice of Large Language Models (LLMs). While managed proprietary models (e.g., from OpenAI, Google, Anthropic) offer strong performance and fast onboarding, they can limit customization and raise concerns around data sovereignty. Open-source models (e.g., LLaMA, Mistral) or small language models (SLMs) offer greater control, often at lower cost, but require internal infrastructure and skills.

Agentic Orchestration Platform

This critical building block focuses on the tools and frameworks required to coordinate and manage autonomous AI agents. Agentic orchestration enables these intelligent entities to perform complex, multi-step tasks by breaking down goals, utilizing tools, making decisions, and collaborating. For instance, a claims processing agent might call a document parser agent, then hand results to a compliance agent, completing a task end-to-end with minimal human input. Companies can choose from a growing ecosystem of platforms for building and deploying agents. These range from open-source frameworks like LangChain, AutoGen, and CrewAI, which offer flexibility and community support, to commercial platforms such as Google Cloud's Vertex AI Agent Builder, Amazon Bedrock AgentCore, Azure AI Foundry, and Salesforce Agentforce, which often provide managed services, enterprise-grade security, and robust integrations.
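The claims-processing hand-off described above might be sketched as follows. Each "agent" here is a plain Python function with hard-coded rules, purely to show the orchestration pattern; in practice each step would wrap an LLM or tool call, and the function names and the 10,000 review limit are hypothetical.

```python
def document_parser_agent(claim_text: str) -> dict:
    """Hypothetical parser: extracts 'key: value' pairs from the claim text."""
    fields = {}
    for line in claim_text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            fields[key.strip().lower()] = value.strip()
    return fields


def compliance_agent(fields: dict, limit: float = 10_000.0) -> dict:
    """Hypothetical rule: amounts above the limit need human review."""
    amount = float(fields.get("amount", 0))
    return {"approved": amount <= limit, "needs_review": amount > limit}


def claims_processing_agent(claim_text: str) -> dict:
    """Orchestrator: calls the parser, then hands its results to compliance."""
    fields = document_parser_agent(claim_text)
    decision = compliance_agent(fields)
    return {**fields, **decision}


result = claims_processing_agent("Claimant: J. Doe\nAmount: 2500")
print(result)
# {'claimant': 'J. Doe', 'amount': '2500', 'approved': True, 'needs_review': False}
```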

GenAI Pilot & Validation

Early pilots help validate value, build confidence, and gather essential feedback before wider rollouts. This is where ideas start to become tangible.

Targeted GenAI Prototyping

Starting with targeted prototypes helps teams experiment safely. Good candidates are prototypes that promise efficiency gains by automating repetitive, knowledge-intensive tasks (e.g., drafting initial legal documents, generating marketing content, summarizing internal research materials), or that deliver enhanced customer or employee experiences (e.g., personalized support chatbots, dynamic self-service portals). Prompt engineering at this stage helps refine responses and adapt models to brand tone.

Human-in-the-Loop Workflows

Including humans in the decision loop helps ensure outputs meet quality and safety standards. For example, legal departments might review AI-generated contract clauses before final approval[8].
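A review gate of this kind could be sketched as below. The confidence score, the 0.9 threshold, and the function names are illustrative assumptions; a legal team might reasonably choose to route every draft to review regardless of score.

```python
def route_output(draft: str, confidence: float, threshold: float = 0.9) -> dict:
    """Auto-approve only high-confidence drafts; queue the rest for a reviewer."""
    status = "auto_approved" if confidence >= threshold else "pending_review"
    return {"draft": draft, "status": status}


def apply_review(item: dict, approved: bool, reviewer: str) -> dict:
    """Record the human decision on an item that is awaiting review."""
    if item["status"] != "pending_review":
        raise ValueError("item is not awaiting review")
    return {
        **item,
        "status": "approved" if approved else "rejected",
        "reviewer": reviewer,
    }


queued = route_output("Draft indemnification clause", confidence=0.62)
print(queued["status"])  # pending_review

final = apply_review(queued, approved=True, reviewer="legal-team")
print(final["status"])   # approved
```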

Monitoring and Iterative Feedback

Real-time analytics dashboards and feedback mechanisms allow organizations to track usage trends, spot errors, and continuously improve. In an e-commerce context, monitoring AI-generated product recommendations can reveal opportunities to adjust for seasonal trends[9]. Insights from this pilot feedback loop directly inform the refinement of underlying data pipelines and the optimization of prompt templates, ensuring these foundational assets are robust and reusable.

Enterprise-wide Expansion & Scale

At this stage, GenAI moves from pilots to embedded business processes, becoming an enterprise-wide growth catalyst that drives measurable impact across functions and geographies.

Embedded AI-Powered Processes

Seamlessly embedding GenAI into critical systems that drive business processes (e.g., order returns, customer service) allows organizations to orchestrate intelligent, end-to-end workflows. This often manifests as the 'Copilot' paradigm, where GenAI acts as an intelligent assistant embedded directly into widely used enterprise applications (e.g., CRM, ERP, productivity suites). For example, integrating an AI copilot with a CRM can enable hyper-personalized sales follow-ups or automatically prioritize leads, empowering sales teams to focus on high-value opportunities.

Enable & Empower Teams

Providing self-service innovation sandboxes empowers business teams to experiment safely with new GenAI use cases, fostering a culture of rapid innovation and decentralized problem-solving[10]. Establishing a dedicated AI Center of Excellence (CoE) provides governance playbooks and prompt libraries, and gives the organization an internal capability hub that accelerates adoption across departments. These CoEs are pivotal not only in providing technical guidance but also in driving robust change management strategies and leading comprehensive GenAI upskilling programs for the workforce.

Measuring Enterprise Impact

Robust performance measurement frameworks allow organizations to track ROI and demonstrate concrete business outcomes. Executive dashboards can showcase metrics such as efficiency gains, revenue uplift, or customer satisfaction improvements, providing leadership with clear visibility. Measurement should continue through ongoing adaptation and cross-organizational rollout, ensuring models evolve with regional needs and market dynamics. For example, a global retailer might report on increased conversion rates and reduced customer service costs driven by AI-powered assistants.

Whether you're just starting to explore GenAI or scaling early experiments, taking a thoughtful, layered approach can make all the difference. By focusing on strong foundations and moving step by step, organizations can unlock real business value while building trust and momentum along the way. If you're thinking about what's next for your own GenAI roadmap, the hierarchy above can serve as a helpful guide to shape practical, impactful progress.

To support that journey, XCentium offers GenAI readiness assessments that help identify gaps, align opportunities with business goals, and set a secure, scalable course for adoption. Contact us at xcentium.com/connect to start the conversation.