Sitecore Search offers many types of sources: Web Crawler, Advanced Web Crawler, Feed Crawler, Sales Feed Crawler, API Crawler, API Push, PDFs, Localizer Crawler, and others.

Choose the source type based on the specific requirements of your project. The decision often depends on data recency, indexing latency, the completeness of the final indexed data, and similar factors. If your project uses an API Push source, this post answers some of the questions I had to raise support tickets for.

How to see what data was pushed to the Ingestion API

When using API Push sources in Sitecore Search, developers use the Ingestion API to keep the source up to date. The Ingestion API adds, updates, and removes documents in near real time.

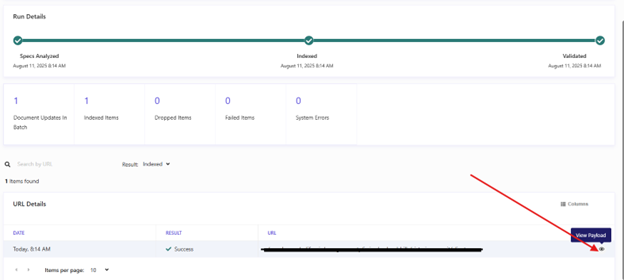

The Ingestion API uses JSON payloads for its CRUD operations. In your backend, you build a JSON payload for an operation such as Add, Update, or Delete and send it to update the source. During development, you need to verify the actual payload that Sitecore Search ingested to make sure the data is correct, and you will also need the raw JSON to debug indexing issues in production. Sitecore Search provides a way to view the final JSON that was ingested.

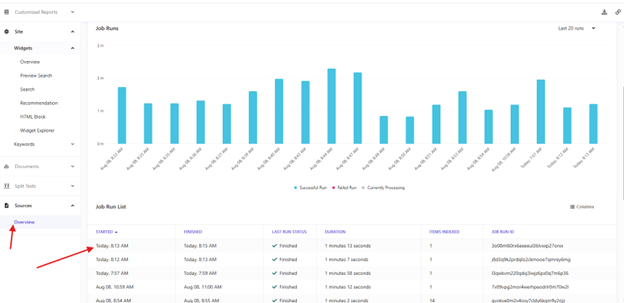

To find the actual JSON consumed by Sitecore Search, open the Search CEC and navigate to Analytics > Sources > Overview, then click a source to open its dashboard. This page lists each time the source was updated, and you can drill down into each batch. Inside a batch, click the “show” icon in the rightmost column, next to the URL of any item, to see the JSON that Search consumed.
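To make the payload discussion concrete, here is a minimal Python sketch of how a backend might build Add, Update, and Delete requests. The URL template, the `Authorization` header carrying the ingestion API key, the HTTP method used for each operation, and the `{"document": {"id": ..., "fields": ...}}` body shape are my assumptions about the Ingestion API, so confirm them against the Ingestion API reference for your domain and region. The function only builds a `urllib.request.Request` and does not send it, which also makes it easy to log `req.data` and compare it with the JSON shown in the CEC.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# ASSUMPTION: base URL and path template; confirm them in the Ingestion API
# reference for your region before use.
DEFAULT_BASE_URL = "https://discover.sitecorecloud.io/ingestion/v1"

# ASSUMPTION: mapping of the Add/Update/Delete operations to HTTP methods.
METHODS = {"add": "POST", "update": "PUT", "delete": "DELETE"}


def build_ingestion_request(operation: str, domain_id: str, source_id: str,
                            entity: str, locale: str, api_key: str,
                            doc_id: str, fields: Optional[dict] = None,
                            base_url: str = DEFAULT_BASE_URL
                            ) -> urllib.request.Request:
    """Build, but do not send, one Ingestion API request."""
    method = METHODS[operation]
    path = (f"/domains/{domain_id}/sources/{source_id}"
            f"/entities/{entity}/documents")
    # Add posts to the collection; Update and Delete address one document ID.
    if operation != "add":
        path += "/" + urllib.parse.quote(doc_id, safe="")
    url = f"{base_url}{path}?{urllib.parse.urlencode({'locale': locale})}"

    body = None
    if operation != "delete":
        # This JSON is what you would compare with the CEC "show" view.
        payload = {"document": {"id": doc_id, "fields": fields or {}}}
        body = json.dumps(payload).encode("utf-8")

    return urllib.request.Request(
        url, data=body, method=method,
        headers={"Authorization": api_key,
                 "Content-Type": "application/json"})
```

To actually send a request, pass it to `urllib.request.urlopen(req)`. Keeping the builder free of network calls makes it easy to unit test and to log exactly what was pushed.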

Can I remove all documents from an API Push source in the CEC?

During development, you may find that a lot of junk data has been pushed to the source, for example from testing or sample data. Sometimes you need to start over with a clean source that has no documents. There are a couple of ways to do this:

Archive and delete the source in the CEC – These are two separate actions: to remove a source from a domain, you first archive it and then delete it. This works when your head does not rely on a specific source ID to pull data. You can then create a new source (which gets a new source ID) and, after updating the configuration of the system that pushes data through the Ingestion API, start pushing to the new source.

Remove items from the source using PowerShell or Postman – Sometimes you need to preserve the name and ID of the source because they have been shared with many downstream systems. In that case, query all the document IDs in the source and call the Ingestion API's delete operation for each ID. Deleting every item this way takes longer, but it is the only way to clear the source while preserving its ID and name.
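The ID-by-ID cleanup can be scripted. The sketch below uses Python rather than PowerShell and assumes you already have the list of document IDs (how you query them is not shown). `document_url` is a hypothetical function you supply that returns the per-document Ingestion API URL for your domain, source, entity, and locale; that URL shape and the `Authorization` header are assumptions to verify against the API documentation. The loop keeps going when a single delete fails and returns the failed IDs so you can retry them.

```python
import urllib.request
from typing import Callable, Iterable, List


def make_deleter(document_url: Callable[[str], str],
                 api_key: str) -> Callable[[str], int]:
    """Return a callback that sends one DELETE to the Ingestion API.

    document_url is a hypothetical, caller-supplied function; the URL shape
    and the Authorization header are assumptions to check in the API docs.
    """
    def delete_one(doc_id: str) -> int:
        req = urllib.request.Request(document_url(doc_id), method="DELETE",
                                     headers={"Authorization": api_key})
        with urllib.request.urlopen(req, timeout=30) as resp:
            return resp.status
    return delete_one


def clear_source(doc_ids: Iterable[str],
                 delete_one: Callable[[str], int]) -> List[str]:
    """Delete every document ID one at a time; return the IDs that failed."""
    failed: List[str] = []
    for doc_id in doc_ids:
        try:
            status = delete_one(doc_id)
        except OSError:
            # urllib raises HTTPError/URLError (both OSError subclasses) on
            # non-2xx responses and network failures; record and continue.
            failed.append(doc_id)
            continue
        if not 200 <= status < 300:
            failed.append(doc_id)
    return failed
```

Passing the delete callback in, instead of hard-coding the HTTP call, lets you dry-run the loop with a fake and rerun `clear_source` on the returned IDs until the list is empty.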

What about Parallel Workers in API Push Sources?

If you are familiar with Web Crawlers, you know the crawler has a setting for the number of parallel threads. It improves crawler performance by letting the crawler index the website faster with multiple threads.

API Push sources currently have no equivalent setting for parallel workers. I raised a support ticket, and the product team is considering it. In my scenario, about 60 content authors make content changes that ultimately trigger indexing via the Edge webhook. So when authors publish many items, each triggering a source update through the Ingestion API, you may see some delay before Search ingests the data.
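There is no product-side setting for this, but one mitigation I would consider (my own suggestion, not something Sitecore provides) is to coalesce webhook notifications before calling the Ingestion API, so an item published several times in a short window is pushed only once. This reduces the number of calls; it does not make ingestion itself faster. The sketch assumes the Edge webhook payload has an `updates` list whose entries carry `identifier`, `entity_culture`, and `operation`; verify those field names against a payload you actually receive. How you buffer payloads (for example, a queue drained by the function) is not shown.

```python
from typing import Dict, Iterable, List, Tuple


def coalesce_updates(payloads: Iterable[dict]) -> List[Tuple[str, str, str]]:
    """Collapse buffered webhook updates so each (item, culture) is pushed once.

    The last operation seen for a key wins, so an item updated twice and then
    deleted yields a single delete. Output keeps first-seen order.
    """
    latest: Dict[Tuple[str, str], str] = {}
    for payload in payloads:
        # ASSUMPTION: field names mirror the Experience Edge webhook payload;
        # check them against a payload you actually receive.
        for update in payload.get("updates", []):
            key = (update["identifier"], update.get("entity_culture", ""))
            latest[key] = update["operation"]  # re-assigning keeps dict order
    return [(item_id, culture, op) for (item_id, culture), op in latest.items()]
```

Each resulting tuple then maps to one Update or Delete call to the Ingestion API.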

How do I call an authenticated serverless function from an Edge webhook?

With item webhooks, you can configure OAuth credentials so that a token is automatically requested for the webhook and passed to the webhook's receiver. Edge webhooks do not offer this. So I raised another support ticket, and support recommended adding a pass-through that adds the authentication and then calls the final function. In my project, because of customer constraints, I used the head as the pass-through. Publishing triggered the Edge webhook, which was configured to call a pass-through API on the head. The head added the authentication and forwarded the request to the Azure Function, which built the final JSON and sent it to the Ingestion API.
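The pass-through step can be sketched in framework-agnostic Python; if your head is Next.js, the same logic would live in an API route. The sketch assumes the Azure Function is protected by a function key sent in the `x-functions-key` header (an OAuth bearer token would follow the same pattern with an `Authorization` header). Because the pass-through endpoint is publicly reachable, it also checks a shared secret in a hypothetical `x-webhook-secret` header; whether your Edge webhook can be configured to send such a header is something to confirm in the Experience Edge webhook documentation.

```python
import hmac
import urllib.request
from typing import Mapping, Optional, Tuple


def _header(headers: Mapping[str, str], name: str) -> Optional[str]:
    """Case-insensitive header lookup (frameworks differ in header casing)."""
    for key, value in headers.items():
        if key.lower() == name.lower():
            return value
    return None


def is_trusted(headers: Mapping[str, str], expected_secret: str,
               header_name: str = "x-webhook-secret") -> bool:
    """Check a shared secret sent by the webhook (hypothetical header name)."""
    supplied = _header(headers, header_name)
    # compare_digest avoids leaking the secret through timing differences.
    return supplied is not None and hmac.compare_digest(
        supplied.encode("utf-8"), expected_secret.encode("utf-8"))


def build_forward_request(function_url: str, body: bytes,
                          headers: Mapping[str, str],
                          function_key: str) -> urllib.request.Request:
    """Re-issue the webhook body to the Azure Function with auth added."""
    content_type = _header(headers, "content-type") or "application/json"
    return urllib.request.Request(
        function_url,
        data=body,  # forward the Edge webhook payload unchanged
        method="POST",
        headers={"Content-Type": content_type, "x-functions-key": function_key},
    )


def forward_webhook(function_url: str, body: bytes, headers: Mapping[str, str],
                    function_key: str, secret: str) -> Tuple[int, bytes]:
    """Reject untrusted calls; otherwise forward and relay the function's reply."""
    if not is_trusted(headers, secret):
        return 401, b"unauthorized"
    req = build_forward_request(function_url, body, headers, function_key)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.status, resp.read()
```

Forwarding the body untouched keeps the head thin: all payload mapping stays in the Azure Function, and the head only adds and checks credentials.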

These are some of the smaller things to keep in mind when working with API Push sources. If you want a deeper understanding of these points or want to see them in action, please reach out to me on LinkedIn or through the Contact Us form.
