While Sitecore Search offers a compelling, well-integrated solution for Sitecore projects with extensive out-of-the-box functionality, exploring Azure AI Search can provide valuable insights into building custom search solutions. This post will delve into implementing Azure AI Search in Sitecore XM Cloud Next.js projects, showcasing its AI-powered capabilities and ease of use.

Introduction to Azure AI Search

Azure AI Search, formerly known as Azure Cognitive Search, is a cloud-based search-as-a-service solution that leverages advanced AI and natural language processing capabilities. It stands out for its ability to efficiently search and analyze unstructured data, making it an excellent choice for complex content management systems like Sitecore XM Cloud[2].

Key features that set Azure AI Search apart include:

1. AI-powered content enrichment

2. Natural language processing for improved search relevance

3. Image and text analysis capabilities

4. Support for over 50 languages

5. Seamless integration with other Azure services

Through my implementation, I found these features particularly useful for enhancing search functionality in Sitecore XM Cloud Next.js projects.

Setting Up Azure AI Search

To get started with Azure AI Search, follow these steps in the Azure portal:

1. First, create your Azure AI Search resource:

- Head to the Azure portal and click "Create a resource"

- Search for "Azure AI Search" and select it

- Select your subscription, resource group, and give it a meaningful name

- For testing purposes, the Basic pricing tier works well

- Click "Review + create" followed by "Create"

2. Next, configure your search service:

- Once your resource is ready, navigate to it

- Look for "Indexes" in the left menu to create your new index

- Set up your index schema with the appropriate fields, data types, and attributes

- Configure your data sources (I used Azure Blob Storage for Sitecore content)

- Set up indexers for automatic index updates

3. Finally, enable AI enrichment:

- Find "Skillsets" in your search service

- Create a new skillset and add the cognitive skills you need (I found entity recognition and language detection particularly useful)

- Connect the skillset to your indexer to enrich content during indexing
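As a rough sketch of the index schema step, here is what an index definition for the crawler described later in this post could look like. The field names mirror the document the crawler builds (`id`, `url`, `title`, `content`, `description`, `source`). The index name `sitecore-pages` and the attribute choices are assumptions for illustration, not settings taken from the original project. The dictionary follows the shape of the request body used by the Azure AI Search REST "Create Index" operation.

```python
import json

# Hypothetical index name; use whatever fits your environment.
INDEX_NAME = "sitecore-pages"


def build_index_definition(name: str) -> dict:
    """Return an index definition shaped like the REST 'Create Index' body."""
    return {
        "name": name,
        "fields": [
            # Exactly one field is the document key, and it must be a string.
            {"name": "id", "type": "Edm.String", "key": True, "filterable": True},
            # Stored for display only, so it is not full-text searchable.
            {"name": "url", "type": "Edm.String", "searchable": False, "retrievable": True},
            {"name": "title", "type": "Edm.String", "searchable": True, "sortable": True},
            {"name": "content", "type": "Edm.String", "searchable": True},
            {"name": "description", "type": "Edm.String", "searchable": True},
            # Filterable and facetable so results can be narrowed by source.
            {"name": "source", "type": "Edm.String", "searchable": False,
             "filterable": True, "facetable": True},
        ],
    }


definition = build_index_definition(INDEX_NAME)
print(json.dumps(definition, indent=2))
```

Whichever attributes you choose, the field names in the index have to match the keys of the documents you upload.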

Sitecore Implementation Part 1: Building a Website Crawler with Azure Functions

While Azure AI Search comes with built-in indexers for various data sources like Azure Blob Storage, Azure SQL Database, and Cosmos DB, I noticed it lacked a web crawler similar to what Sitecore Search provides.

To bridge this gap, I developed a custom crawler using Azure Functions. This approach gave me several advantages:

- The ability to tailor the crawl specifically to our Sitecore XM Cloud site's structure

- Full control over crawl frequency and depth

- Flexibility in content extraction and metadata handling

Here's how I approached building the crawler with Azure Functions:

1. Set up the Azure Function App:

- Create a new Function App in the Azure portal

- Choose Python as your runtime stack

2. Build the crawler function:

- Utilize BeautifulSoup4 for parsing HTML

- Create logic to navigate through your Sitecore XM Cloud site

- Extract the relevant content and metadata

3. Connect with Azure AI Search:

- Use the Azure SDK for Python to interface with your search service

- Handle the upload of crawled content to your search index

4. Set up scheduling:

- Configure a timer trigger using a cron expression for regular crawls
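One detail worth knowing for the scheduling step: Azure Functions timer triggers use six-field NCRONTAB expressions, with a leading seconds field, rather than the classic five-field cron format. The helper below only labels the fields so the order is easy to see. `describe_schedule` and the example schedule are made up for illustration and are not part of the project.

```python
# Field order for Azure Functions timer triggers (NCRONTAB, six fields).
NCRONTAB_FIELDS = ("second", "minute", "hour", "day", "month", "day_of_week")


def describe_schedule(expression: str) -> dict:
    """Split a six-field NCRONTAB expression into named fields."""
    parts = expression.split()
    if len(parts) != len(NCRONTAB_FIELDS):
        raise ValueError(f"expected 6 fields, got {len(parts)}: {expression!r}")
    return dict(zip(NCRONTAB_FIELDS, parts))


# Example schedule value: once a day at 02:00 (UTC unless configured otherwise).
nightly = describe_schedule("0 0 2 * * *")
print(nightly)
```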

Crawler Azure Functions

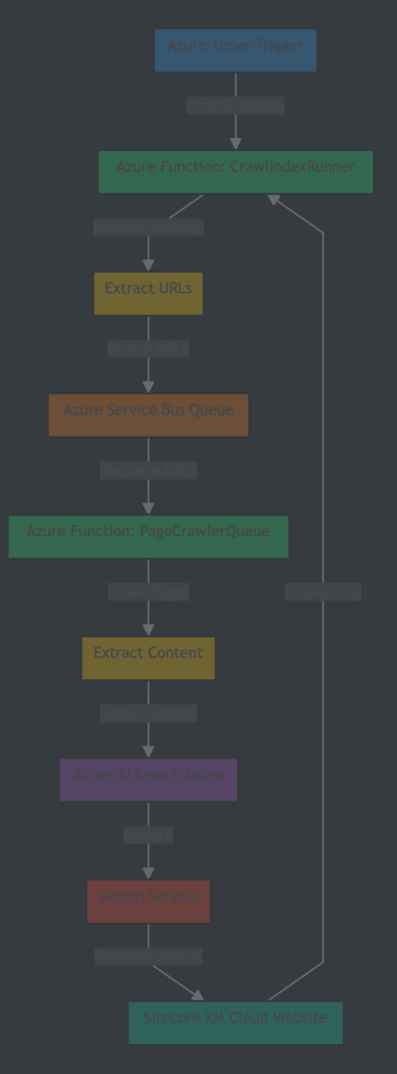

Here's a diagram that illustrates how the different components of our crawler system work together:

Let's dive into the core components of our crawler system. I'll walk you through each part and share some code snippets that demonstrate the key functionality.

CrawlIndexRunner

The CrawlIndexRunner is where it all begins. This Azure Function kicks off our crawling process by doing three main things:

1. Fetches the sitemap from your specified URL

2. Processes it to get all the URLs we need to crawl

3. Sends these URLs to an Azure Service Bus queue for processing

Here's a look at the key parts of the implementation:

```csharp
// Azure Function triggered by a timer to initiate web crawling process
[Function("CrawlIndexRunner")]
public async Task RunAsync([TimerTrigger("%CrawlIndexSchedule%")] TimerInfo myTimer)
{
    _logger.LogInformation("CrawlIndexRunner function executed at: {CurrentTime}", DateTime.UtcNow);
    await ProcessSitemapAsync(_sitemapsRootUrl);
}

// Process the sitemap at the given URL
private async Task ProcessSitemapAsync(string url)
{
    // Fetch and parse the sitemap XML
    var response = await _httpClient.GetAsync(url);
    var content = await response.Content.ReadAsStringAsync();
    var doc = XDocument.Parse(content);
    var ns = doc.Root.GetDefaultNamespace();

    // Determine if it's a sitemap index or a regular sitemap
    if (doc.Root.Name.LocalName == "sitemapindex")
    {
        await ProcessSitemapIndexAsync(doc, ns);
    }
    else
    {
        var urls = ExtractUrlsFromSitemap(doc, ns);
        await ProcessSitemapUrlsAsync(url, urls);
    }
}

// Process URLs extracted from a sitemap
private async Task ProcessSitemapUrlsAsync(string sitemapSource, List<string> urls)
{
    // Divide URLs into batches and send to Azure Service Bus
    var batches = urls.Chunk(_batchSize);

    foreach (var batch in batches)
    {
        // Create a message containing the batch of URLs
        var message = new CrawlRequest
        {
            Source = sitemapSource,
            Urls = batch.ToList()
        };

        // Send the message to Azure Service Bus
        await _serviceBusClient.SendMessageAsync(
            new ServiceBusMessage(JsonSerializer.Serialize(message))
            {
                ContentType = "application/json"
            }
        );
    }
}
```

This function runs on a schedule defined by the CrawlIndexSchedule setting. It processes the sitemap, handles both sitemap indexes and regular sitemaps, and queues up URLs for crawling in batches.
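The snippet calls `ExtractUrlsFromSitemap` and batches the result, but the extraction itself isn't shown. Here is a minimal sketch of the same flow, written in Python with only the standard library to keep it short. The function names and the sample sitemap are illustrative assumptions. The message shape mirrors the `CrawlRequest` above, with `Source` and `Urls` properties.

```python
import json
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Strip the '{namespace}' prefix ElementTree puts in front of tag names."""
    return tag.rsplit("}", 1)[-1]


def extract_locations(sitemap_xml: str) -> tuple[str, list[str]]:
    """Return the root kind ('sitemapindex' or 'urlset') and its <loc> values."""
    root = ET.fromstring(sitemap_xml)
    locations = []
    for entry in root:  # <url> entries in a urlset, <sitemap> entries in an index
        for child in entry:
            if local_name(child.tag) == "loc" and child.text:
                locations.append(child.text.strip())
    return local_name(root.tag), locations


def chunk(items: list[str], size: int) -> list[list[str]]:
    """Split items into consecutive batches of at most `size` elements."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


def build_messages(source: str, urls: list[str], batch_size: int) -> list[str]:
    """Serialize one JSON message per batch, mirroring the CrawlRequest shape."""
    return [
        json.dumps({"Source": source, "Urls": batch})
        for batch in chunk(urls, batch_size)
    ]


# Made-up sitemap used only to exercise the functions above.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about</loc></url>
  <url><loc>https://example.com/news</loc></url>
</urlset>"""

kind, urls = extract_locations(SAMPLE)
messages = build_messages("https://example.com/sitemap.xml", urls, batch_size=2)
print(kind, len(urls), len(messages))
```

For a `sitemapindex` root, the returned locations are child sitemap URLs, so the caller would fetch each one and run the same extraction again rather than queue them for crawling.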

PageCrawlerQueue & PageCrawlerBase

PageCrawlerQueue is an Azure Function triggered by messages in the Azure Service Bus queue. It processes these messages, which contain URLs to crawl. PageCrawlerBase is the base class that provides the core functionality for crawling web pages and indexing their content in Azure AI Search.

Key functionalities:

1. Message Processing (PageCrawlerQueue):

```csharp
[Function("PageCrawlerQueue")]
public async Task RunAsync(
    [ServiceBusTrigger("%ServiceBusQueueName%", Connection = "ServiceBusConnection")]
    ServiceBusReceivedMessage triggerMessage,
    ServiceBusMessageActions triggerMessageActions,
    CancellationToken cancellationToken)
{
    // Note: `receiver` is not declared in this excerpt; it is assumed to be a
    // Service Bus receiver that the class sets up elsewhere.

    // Process the trigger message
    if (triggerMessage?.Body is not null)
    {
        await ProcessMessageAsync(triggerMessage, receiver, cancellationToken);
    }

    // Process remaining messages
    await ProcessRemainingMessagesAsync(receiver, cancellationToken);
}
```

2. Page Crawling (PageCrawlerBase):

```csharp
/// <summary>
/// Crawls a web page and extracts relevant information for indexing.
/// </summary>
/// <param name="url">The URL of the page to crawl.</param>
/// <param name="source">The source identifier for the crawled page.</param>
/// <returns>A SearchDocument containing the extracted page information.</returns>
public async Task<SearchDocument> CrawlPageAsync(string url, string source)
{
    // Fetch the HTML content of the web page
    var htmlDoc = await _httpClient.GetStringAsync(url);

    // Parse the HTML content
    var doc = new HtmlDocument();
    doc.LoadHtml(htmlDoc);

    // Extract the page title
    var title = doc.DocumentNode.SelectSingleNode("//title")?.InnerText.Trim();

    // Extract the meta description
    var metaDescription = doc.DocumentNode.SelectSingleNode("//meta[@name='description']")?.GetAttributeValue("content", "");

    // Extract the main content of the page
    var content = ExtractContent(doc);

    // Create and return a SearchDocument with extracted information.
    // This needs to match the schema defined in Azure AI Search
    return new SearchDocument
    {
        ["id"] = GenerateUrlUniqueId(url), // Generate a unique ID for the document
        ["url"] = url, // Store the original URL
        ["title"] = title, // Store the page title
        ["content"] = content, // Store the main content
        ["description"] = metaDescription, // Store the meta description
        ["source"] = source // Store the source identifier
    };
}
```

3. Indexing (PageCrawlerBase):

```csharp
/// <summary>
/// Indexes a single SearchDocument in Azure AI Search.
/// </summary>
/// <param name="document">The SearchDocument to be indexed.</param>
/// <returns>A task representing the asynchronous operation.</returns>
private async Task IndexSearchDocumentAsync(SearchDocument document)
{
    try
    {
        // Create a batch with a single document for uploading
        var batch = IndexDocumentsBatch.Upload(new[] { document });

        // Attempt to index the document in Azure AI Search
        await _searchClient.IndexDocumentsAsync(batch);

        // Log successful indexing
        _logger.LogInformation("Successfully indexed document: {DocumentId}", document["id"]);
    }
    catch (Exception ex)
    {
        // Log any errors that occur during indexing
        _logger.LogError(ex, "Error indexing document: {DocumentId}", document["id"]);

        // In a production environment, you might want to implement retry logic here
    }
}
```
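The catch block mentions retry logic without showing any. One common shape for it is exponential backoff with a capped number of attempts. The sketch below shows the idea in Python using only the standard library. `retry_with_backoff`, its parameters, and the `flaky_upload` stand-in are hypothetical, and a real implementation would likely retry only transient failures (throttling or timeouts, for example) rather than every exception.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying on failure with exponentially growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the last error to the caller.
            # The delay doubles each time: base, 2 * base, 4 * base, ...
            sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError("max_attempts must be at least 1")


# Stand-in for an indexing call that fails twice before succeeding.
calls = {"count": 0}


def flaky_upload() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated transient failure")
    return "indexed"


# Record the delays instead of actually sleeping, to keep the demo instant.
delays: list[float] = []
result = retry_with_backoff(flaky_upload, sleep=delays.append)
print(result, delays)
```

Passing the sleep function in as a parameter keeps the helper easy to test, since the waits can be recorded rather than performed.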

These classes work together to process queued URLs, crawl web pages, extract relevant information, and index the content in Azure AI Search, enabling efficient and scalable web crawling and indexing.
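The crawling snippet relies on two helpers that are not shown, `GenerateUrlUniqueId` and `ExtractContent`. The originals are not reproduced here, so what follows is only a rough Python sketch of what such helpers might do. Azure AI Search document keys may only contain letters, digits, underscores, dashes, and equal signs, so a raw URL cannot be used as a key; URL-safe Base64 encoding is one common way around that. The list of skipped tags and all names below are assumptions.

```python
import base64
from html.parser import HTMLParser


def generate_url_unique_id(url: str) -> str:
    """Encode a URL as URL-safe Base64 so it only uses key-safe characters."""
    return base64.urlsafe_b64encode(url.encode("utf-8")).decode("ascii")


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping elements that rarely hold page content."""

    SKIPPED = {"script", "style", "nav", "header", "footer", "noscript", "title"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside any skipped element
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Collapse runs of whitespace so the indexed text stays compact.
        text = " ".join(data.split())
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def extract_content(html: str) -> str:
    """Return the page's visible text as a single space-separated string."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Made-up page used only to exercise the helpers.
page = (
    "<html><head><title>Home</title><style>p { margin: 0 }</style></head>"
    "<body><nav>Menu</nav><main><h1>Welcome</h1><p>Hello   world</p></main>"
    "<footer>Copyright</footer></body></html>"
)
print(extract_content(page))
print(generate_url_unique_id("https://example.com/about"))
```

Because the encoding is reversible, the same URL always maps to the same key, so re-crawling a page updates its existing document instead of creating a duplicate.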

Conclusion

This post has covered the implementation of Azure AI Search in Sitecore XM Cloud Next.js projects. We've explored:

1. A website crawler using Azure Functions and Service Bus for URL processing

2. A page crawling system for content extraction

3. An indexing setup for Azure AI Search

Code snippets and explanations have been provided to illustrate these core features, demonstrating how to create a scalable, AI-powered search solution for Sitecore sites.

This approach allows leveraging Azure AI Search features while maintaining flexibility in search implementation. It offers an alternative to Sitecore's built-in search capabilities, potentially enhancing your project's search functionality.

In the next part, I'll walk you through an example front-end implementation that builds search components with Next.js in a typical Sitecore XM Cloud setup.

Useful links

- Azure Cognitive Search: What it is, features, and costs

- Azure AI Search: What is it, and how does it help my AI projects?

- Create an Azure AI Search solution

- Azure-Samples/functions-python-web-crawler

- Azure AI Search-Retrieval-Augmented Generation

- Azure AI Search - Cazton